I recently oversaw a project for a large petrochemicals company in which we identified a potential 500% saving in capacity in a heavily used VMware cluster. I was gobsmacked at the over-allocation of capacity for this production environment, and decided to share some advice on capacity analysis in VMware.

How to find the savings in your VMware environment

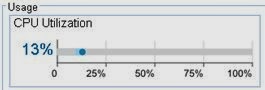

Hook into your VMware environment and extract CPU utilization numbers for host, guest and cluster. Use the logical definitions of VMware folders or the cluster/host/guest relationships to carve out meaningful correlations. Be careful with heterogeneous environments: not all hosts or clusters will have the same configuration, and configuration is important. Use a tool like CA Capacity Management to provide a knowledge base of the different configurations so you can compare apples and oranges. Overlay all the utilization numbers and carry out a percentile analysis on the results. The results here represent a 90th percentile analysis, although arguably a higher percentile should be used for critical systems. Use the percentile as a "high watermark" figure and compare it against average utilization to show the "tidal range" of utilization.

Memory utilization is a little more challenging given the diversity of the VMware metrics. Memory consumed is the host physical memory granted to the guest OS, but if the data collection period includes an OS boot, the figure will be distorted by the memory allocation routines. Memory active is based on "recently touched" pages, and so, depending on the load type, may not capture 100% of the actual requirement. Additionally, there is a host overhead which becomes significant when the number of virtual machines reaches a critical level. Memory figures are further distorted by platforms like Java, SQL Server or Oracle, which hoard memory in hamster fashion in case it may be useful. For these reasons, it may also be relevant to consider OS-specific metrics (such as those from Performance Monitor). The capacity manager should therefore use a combination of these metrics for different purposes, and should refine their practice to avoid paging (the symptom of insufficient memory).
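To make the percentile step concrete, here is a minimal sketch of the "high watermark" versus average comparison for CPU utilization. It assumes the samples have already been exported as plain percentages. The function names, the nearest-rank percentile method and the sample figures are illustrative assumptions, not output from the project or from any CA tool.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct% of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def tidal_range(samples, pct=90):
    """Compare the percentile 'high watermark' against the average."""
    high = percentile(samples, pct)
    avg = mean(samples)
    return {"high_watermark": high, "average": avg, "tidal_range": high - avg}


# Hypothetical CPU utilization samples (%) for one guest.
cpu_pct = [12, 15, 11, 18, 22, 35, 60, 41, 19, 14]
summary = tidal_range(cpu_pct, pct=90)
print(summary)
```

For a critical system, the same function can be called with a higher `pct` (95 or 99, say) so that fewer peaks fall above the watermark.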

It is also worth reviewing the IO figures from a capacity point of view, although a little more work is required to determine the capacity of the cluster, due to protocol overheads and behaviours. The response time metrics are a consequence of capacity issues, not a cause, and although important, are a red herring when it comes to capacity profiling and right-sizing (you can't right-size based on a response time, but you can right-size based on a throughput). I've disregarded disk-free stats in this analysis, as they would form part of a storage plan, but check the configuration of your SAN or DAS to determine which IO loads pose a risk of capacity bottlenecks.

The Actionable Plan

Any analysis is worthless without an actionable plan, and this is where some analytics are useful in right-sizing every element within that VMware estate. CA Virtual Placement Manager gives this ability, correlating the observed usage against the [changing] configuration of each asset to determine the right size. This seems to work effectively at cluster, host and guest level, and also incorporates several 'what if' parameters such as hypervisor version, hardware platform (from its impressive model library) and reserve settings. It's pretty quick at determining the right amount of capacity to allocate to each VM, and how many hosts should fit in a cluster, even factoring in forecast data. Using this approach, a whole series of actionable plans were generated very quickly for a number of clusters, showing capacity savings of 500% and more.
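As a rough illustration of what right-sizing from observed usage means, the sketch below turns a percentile utilization figure into a suggested vCPU allocation. This is not how CA Virtual Placement Manager works internally. The formula, the `headroom` parameter and the example figures are assumptions chosen for illustration, and the sketch ignores configuration differences, hypervisor version and forecast data.

```python
import math


def right_size_vcpus(allocated_vcpus, pctile_util_pct, headroom=0.2):
    """Suggest a vCPU count from a percentile utilization figure.

    allocated_vcpus: vCPUs currently allocated to the VM
    pctile_util_pct: high-watermark utilization, as % of the allocation
    headroom:        fractional safety margin on top of observed demand
                     (hypothetical default, tune to your own risk appetite)
    """
    if allocated_vcpus < 1:
        raise ValueError("allocated_vcpus must be at least 1")
    if pctile_util_pct < 0 or headroom < 0:
        raise ValueError("utilization and headroom must not be negative")
    demand = allocated_vcpus * pctile_util_pct / 100  # vCPUs actually used
    return max(1, math.ceil(demand * (1 + headroom)))


# Hypothetical VM: 8 vCPUs allocated, 90th percentile utilization of 30%.
suggested = right_size_vcpus(8, 30, headroom=0.2)
print(f"allocated: 8, suggested: {suggested}")
```

The same shape of calculation applies to memory, provided the demand figure is chosen with the caveats about consumed and active memory in mind.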